Direct Cache Manual

The Direct Illuminance Cache

The direct illuminance cache ('direct cache') is an additional module for the RADIANCE lighting simulation software suite. Depending on scene detail and parameter settings, the direct cache can significantly speed up the RADIANCE indirect light calculation by caching and reusing values from the direct illuminance calculation. A general description is contained in the article 'Direct illuminance caching - a way to enhance the performance of RADIANCE' (Int. Journal of Lighting Research a. Technology, Volume 34, issue 4, 2002). This manual focuses on the use of the direct cache, its adaptation to specific needs through parameter settings, and the possibility of disabling it for certain exceptions. At the end, some further implementation details are given.

| Content | |
| --- | --- |
| 1. | Introduction |
| 2. | Users guide |
| 2.1. | Parameters |
| 2.2. | Object and ray exclusions |
| 2.3. | Remarks on parameters and exclusions |
| 2.4. | Direct caching and parallel rendering |
| 3. | Some further implementation details |

1. Introduction

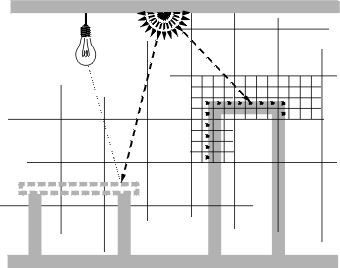

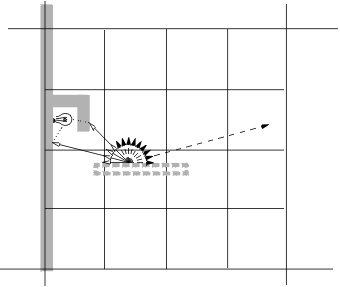

In short, the direct cache contains a representation of the scene calculated from direct illuminance only. To be exact, the stored values are in fact luminances resulting from all incoming direct light contributions. The idea becomes clear when looking at Fig. 1, which shows a demonstration scene and its corresponding direct cache.

Fig. 1: The demo scene and the corresponding direct cache.

The results from the direct calculation are stored in an octree structure, i.e. the scene is successively divided into eight octants, sub-octants and so on, until a specified resolution is reached. Each of these subcubes (the 'leaves' of the octree) contains one illuminance value which will be reused by other ambient sample ray hits occurring therein. As the subcube has a finite dimension, it may of course contain sections of several objects or regions with varying surface normals. A simple surface normal check, ensuring that values calculated for the front of an object are not used for a ray hit at the back, proved - in general - to be the most efficient way of addressing this issue (cf. the comments in the paper).

So, altogether, most ambient sample rays sent out into the scene now receive the information about direct illumination mainly from the cache established at the beginning of the sampling process, rather than from a time-consuming shadow testing procedure for each single ray hit. Experience so far has shown that this can reduce rendering time by factors of between 2 and 4. As it is a simplification, there are exceptional cases for which the direct cache is not appropriate (esp. for highly specular materials whose specular reflection is meant to contribute to the illumination). To account for these exceptions, the direct cache can be disabled where needed.
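The leaf lookup described above can be illustrated with a minimal Python sketch. It is not the actual implementation (which uses blockwise preallocation, see Sect. 3): the names `leaf_index` and `DirectCacheSketch` are hypothetical, and a plain dictionary stands in for the octree storage. It only shows how two nearby ray hits end up sharing one stored value.

```python
def leaf_index(point, cube_min, cube_side, depth):
    """Integer (i, j, k) coordinates of the leaf subcube containing `point`.

    After `depth` refining steps the bounding cube holds 2**depth cells
    along each axis.
    """
    cells = 2 ** depth
    index = []
    for p, lo in zip(point, cube_min):
        i = int((p - lo) / cube_side * cells)
        # Clamp so that points on the upper cube face still map to a valid cell.
        index.append(min(max(i, 0), cells - 1))
    return tuple(index)


class DirectCacheSketch:
    """Hypothetical stand-in for the cache: one stored value per leaf."""

    def __init__(self, cube_min, cube_side, depth):
        self.cube_min = cube_min
        self.cube_side = cube_side
        self.depth = depth
        self.values = {}

    def _key(self, point):
        return leaf_index(point, self.cube_min, self.cube_side, self.depth)

    def store(self, point, value):
        self.values[self._key(point)] = value

    def lookup(self, point):
        # Returns None on a miss, in which case shadow testing would be needed.
        return self.values.get(self._key(point))
```

A hit at (1.0, 2.0, 0.5) and a second hit about 1 cm away fall into the same leaf at depth 7 of a 10.2 m bounding cube, so the second lookup returns the stored value without any shadow rays.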

2. Users guide

2.1. Parameters

The direct cache is intended to be an integral part of the ambient calculation which works silently in the background and automatically adjusts itself to the specific scene characteristics. This works for the vast majority of cases. But as the range of RADIANCE applications is extremely wide, manual tuning with parameters may be necessary in certain cases.

For a better understanding of the ways to manipulate it, some more words about the underlying procedure may be helpful: The octree refining is done in two stages, producing blocks of subcubes which are allocated at once and subsequently filled with data. The first values which are stored act as 'test values'. The relative illuminance difference between those test values is determined and serves as the criterion for the final resolution needed for storing, which may vary for each block. The start value for the resolution and the variation thresholds (three values) which activate and control further refining are set internally or may be given by the user.

Consider the following example: The scene shown above has a bounding cube of 10.2 m side length. Refining this (successively dividing by 2) leads to cubes of (roughly) 5.1 m, 2.55 m, ..., 0.16 m, 0.08 m, and so on. The algorithm tries to start with a resolution better than 0.1 m, so in this case 0.08 m will be chosen, which means 7 steps of octree refining. If the illuminance variation between the first test values exceeds the first threshold value, the block will be refined one step further (8 steps, 0.04 m subcube side); if it exceeds the second (third) threshold, the block will be refined 2 (3) steps further, producing subcubes of 0.02 (0.01) m side. So altogether the resolution may vary between four different values, depending on the detected illuminance variation.
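The arithmetic of this example can be reproduced with a short Python sketch. The function names are hypothetical, and the relative illuminance variation is taken as a given number because the manual does not state how it is computed from the test values; the thresholds are the documented defaults.

```python
def start_depth(cube_side, dr):
    """Halve the bounding cube until the subcube side is not greater than dr.

    Returns (number of refining steps, resulting subcube side).
    """
    depth = 0
    size = cube_side
    while size > dr:
        size /= 2.0
        depth += 1
    return depth, size


def refined_depth(depth, variation, thresholds=(0.6, 1.7, 4.8)):
    """Add one refining step for every threshold the variation exceeds."""
    extra = sum(1 for t in thresholds if variation > t)
    return depth + extra


def subcube_side(cube_side, depth):
    """Subcube side length after `depth` refining steps."""
    return cube_side / 2 ** depth
```

For the 10.2 m demo scene with a start resolution of 0.1 m, `start_depth(10.2, 0.1)` gives 7 steps and a subcube side of about 0.08 m; a block whose variation exceeds all three thresholds ends at depth 10, i.e. subcubes of about 0.01 m.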

So there are three important parameters controlling the direct cache resolution:

| Parameter | Description |
| --- | --- |
| -Dr (val) | The desired start value for the subcube sizes. The actual subcube size depends on the size of the scene bounding cube because of the chosen octree refining procedure, so the parameter is interpreted as an upper limit. The algorithm tries to set a start value for the subcube size that is not greater than this. Remember that three steps of further refining are possible, so the start resolution may well be rather coarse. If -Dr is not set, the start value is by default determined automatically, depending on the scene bounding cube size, resulting in -Dr = 0.10 m for scenes < 30 m, -Dr = 0.15 m for scenes < 100 m, and -Dr = 0.002 times the scene bounding cube side length for scenes > 100 m. |
| -Dt (val1, val2, val3) | The thresholds for relative illuminance variation which control further refining. Often, high settings suffice, allowing higher resolution only for the regions where really big illuminance differences occur. Default settings are 0.6, 1.7, 4.8. |
| -Dv (val) | The (integer) number of test values used for the flexible resolution adjustment. The default setting is 4. It may happen that a big difference in illumination is not 'grasped' by the first 4 ray hits in a certain region; raising the number of test values then helps to sample the local variation more accurately. (The feature is still somewhat experimental. The maximum value depends on the resolution and the internal storage handling, resulting in 16 for a start refining depth of 7, 10 for a depth of 8, and 6 and 4 for depths 9 and 10. But the start refining depth depends on scene size and is not known a priori, so the value will be adjusted automatically if it is set too high.) A setting of 0 will disable flexible resolution adjustment. A setting of 1 makes no sense and will therefore be rejected. |
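The default rules from the table can be written down as a small Python sketch. The function names are hypothetical, the treatment of a scene of exactly 100 m is an assumption (the manual leaves that boundary open), and no limit is applied for start depths other than 7 to 10 because none is documented.

```python
# Maximum number of test values per start refining depth, as listed for -Dv.
MAX_TESTVALS = {7: 16, 8: 10, 9: 6, 10: 4}


def default_start_resolution(cube_side):
    """Automatic -Dr value (in m) for a given scene bounding cube side."""
    if cube_side < 30.0:
        return 0.10
    if cube_side <= 100.0:  # assumption: exactly 100 m falls into this class
        return 0.15
    return 0.002 * cube_side


def clamp_testvals(requested, depth):
    """Adjust a requested -Dv setting to the documented limits."""
    if requested == 0:
        return 0  # flexible resolution adjustment disabled
    if requested == 1:
        raise ValueError("-Dv 1 makes no sense and is rejected")
    limit = MAX_TESTVALS.get(depth)
    if limit is None:
        return requested  # no limit documented for this depth
    return min(requested, limit)
```

For a 200 m scene the sketch yields a start resolution of 0.4 m, and a request for 20 test values at a start depth of 7 is reduced to 16.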

Another parameter controls the general activation of the direct cache. By default, direct caching is used for the ambient sample rays only. It may be switched off completely, or switched on for the direct calculation as well for test runs. The latter setting enables the user to actually "see" the direct cache subcubes (esp. if the test run is performed without ambient calculation) and thus helps in judging whether the current resolution settings are appropriate. One should not be confused by the "unsharp" appearance occurring here and there at the borders of the objects. A rather lax handling of the surface normal check is responsible for that. As long as no other objects are nearby and no really big illuminance differences occur, these deviations have a negligible effect on the accuracy, and it simply isn't worth spending too much rendering time on them (although this would be possible by adjusting the parameters).

Furthermore, in analogy to the ambient treatment, the values contained in the direct cache may be written to a file, too, and be reused in further renderings of the same scene. Generally, the direct cache fills up very quickly, so filewriting is not as important as it is with the ambient values. When using cached values from a file, it is important that the resolution settings remain unchanged.

| Parameter | Description |
| --- | --- |
| -Da (char) | General activation of the direct cache. 'o' switches off direct caching completely; 'p' (preview) activates it for the direct calculation as well; 'n' - the default - means normal mode, in which the cache is used for the ambient sample rays only. |
| -Df (string) | Name of a file to write direct cache values to (or to read them in from a previous calculation). The start resolution parameter (-Dr) has to be the same in order to reuse the values from the file. If -Df is not set, no file writing occurs (default). |
| -Dn (val) | The allowed normal deviation (angle in degrees) for values from the cache to be used for the current ray hit. The default setting is 90 degrees. Of course, smaller values will lead to higher accuracy, but for the usual scenes the effect is negligible and not worth the additional time needed (fewer values can be reused, so more shadow rays are needed). The parameter is nevertheless kept for the sake of completeness. |
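The normal deviation test controlled by -Dn can be pictured with the following Python sketch. It is an illustration under assumptions: the function name is hypothetical, and whether the actual code accepts a deviation exactly equal to the limit is not stated in this manual.

```python
import math


def normal_within_limit(cached_normal, hit_normal, max_deviation_deg=90.0):
    """True if the angle between the two normals does not exceed the limit."""
    dot = sum(a * b for a, b in zip(cached_normal, hit_normal))
    len_a = math.sqrt(sum(a * a for a in cached_normal))
    len_b = math.sqrt(sum(b * b for b in hit_normal))
    # Clamp against rounding errors before taking the arc cosine.
    cos_angle = max(-1.0, min(1.0, dot / (len_a * len_b)))
    angle_deg = math.degrees(math.acos(cos_angle))
    return angle_deg <= max_deviation_deg
```

With the default of 90 degrees, a value stored for the front of a surface is not reused for a hit on its back (normals 180 degrees apart), while surfaces tilted by 30 degrees against each other still share values. Lowering the limit to 20 degrees would reject the tilted case and trigger a shadow test instead.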

2.2. Object and ray exclusions

As already mentioned, the direct cache is a simplification which is not appropriate in certain exceptional cases, namely those where the 'directional characteristics' of objects are vital, which applies to highly specular objects or the BRTDfunc materials. In most cases, however, an additional specularity does not contribute significantly to the overall illumination (for the irradiance calculation, all materials are replaced by pure Lambertian reflectors anyway), so considering the directional properties of objects within the ambient calculation really is a rare case. In such a case, however, the only sensible treatment is to exclude the special object from the direct cache. This is done by means of special object flags. The integer arguments were chosen as a simple means to hand over these flags to the objects themselves. Setting the first integer argument to '1' will exclude this object from the direct cache and activate exact shadow testing for it. Consider the following examples:

| brdf_mat polygon tabletop 0 1 1 12 0.0 0.0 0.7 ... ... ... ... |

| !genbox brdf_mat tabletop 0.5 1.0 0.02 -I 1 1 |

The direct cache is used by default for plastic, metal, trans, their anisotropic counterparts and all brdf-materials, although esp. the latter is to some extent a contradiction in itself. But this is the most flexible way. People using brdf-materials can be assumed to be experts who know when the directional effect is important/big enough or whatever to be considered in the ambient calculation as well.

A second form of object exclusion affects the ambient sample rays emerging from the object. This was first introduced to overcome the effects of a limited resolution. With the new, flexible resolution cache it has become more or less obsolete, except for very big scenes, where the problem may still play a role locally (because a sufficient resolution may not be achievable due to insufficient memory), or otherwise strange cases.

Generally, the direct cache works effectively because of its finite resolution; only then is there a chance of several ray hits falling into one subcube. Imagine now a region of a scene with intricate small geometry detail combined with small light sources producing high illuminance variation in it. In this case, direct caching may be driven to its limits, and raising the resolution to ever higher values will in the end bring no time benefit anymore, so it is best to treat those exceptional regions with accurate shadow testing. As said, this affects the way the illumination is calculated for a special object, and it is controlled by the second integer argument. If set to 2, none of the ambient sample rays emerging from this object will make use of the direct cache. If set to 1, only short ambient sample rays hitting objects nearby will rely on exact shadow testing; the others, sent out far into the room, may well use the direct cache without resolution problems. The minimum ray length for this 'vicinity exclusion' is set with the global parameter -Dl.
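The two object flags can be summarized in a small decision function. This Python sketch is only an illustration of the rules as described in this section; the function and argument names are hypothetical, and it assumes that the first flag is read from the object that is hit while the second flag is read from the object the ambient sample ray emerges from.

```python
def ray_uses_direct_cache(hit_first_flag, source_second_flag, ray_length, dl):
    """Decide whether an ambient sample ray may take its value from the cache.

    hit_first_flag     -- first integer argument of the object that is hit
    source_second_flag -- second integer argument of the emitting object
    ray_length         -- length of the ambient sample ray
    dl                 -- the -Dl setting (minimum ray length for cache use)
    """
    if hit_first_flag == 1:
        return False  # object excluded: exact shadow testing
    if source_second_flag == 2:
        return False  # all rays from this object use exact shadow testing
    if source_second_flag == 1 and ray_length < dl:
        return False  # vicinity exclusion for short rays only
    return True
```

With -Dl set to 0.5, for example, a 0.2 m ray leaving an object flagged with 1 is shadow tested exactly, while a 3 m ray from the same object still uses the cache.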

Last, but not least, a third way of excluding something is offered, this time affecting the rays themselves, namely the ambient supersample rays. This feature, too, is an alternative to raising the resolution. You can specify the fraction of the number of ambient supersample rays for which exact shadow testing will be allowed. This may help to increase computational accuracy in scenes with overall high variation of illuminance which cannot - for whatever reason - be tackled by the parameter settings mentioned above (resolution, variation thresholds, number of test values). The rays which are treated with exact shadow testing will be taken from those rays which are sent out to the regions giving the highest contribution in illuminance.

| Parameter | Description |
| --- | --- |
| -Ds (val) | Supersample exclusion. This fraction (0 < Ds < 1) of all supersample rays will be treated with exact shadow testing. |
| -Dl (val) | The minimum ray length for the 'vicinity exclusion' mentioned above. If the second integer argument of an object is set to '1', ambient sample rays emerging from this object which are shorter than the -Dl setting won't use the direct cache. |
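The supersample exclusion can be sketched in Python as follows. The function name is hypothetical, the contributions are assumed to be known per ray, and rounding the number of excluded rays down is an assumption; the manual only states that the rays with the highest illuminance contribution are chosen.

```python
import math


def exact_supersample_rays(contributions, ds):
    """Indices of the supersample rays that get exact shadow testing.

    contributions -- illuminance contribution of each supersample ray
    ds            -- the -Ds fraction, 0 < ds < 1
    """
    if not 0.0 < ds < 1.0:
        raise ValueError("-Ds must lie strictly between 0 and 1")
    n_exact = math.floor(ds * len(contributions))  # assumption: round down
    # Rank the rays by contribution, highest first.
    ranked = sorted(range(len(contributions)),
                    key=lambda i: contributions[i], reverse=True)
    return set(ranked[:n_exact])
```

With four rays contributing 0.1, 5.0, 0.3 and 2.0 and a setting of 0.5, the second and fourth ray are shadow tested exactly; the other two take their values from the direct cache.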

2.3. Remarks on parameters and exclusions

At first glance, all this may seem a lot. This is a tribute to the wide range of different applications which can be successfully treated with RADIANCE. But normally, the automatic default settings satisfy the usual requirements, so for the first uses of the direct cache add-on you may well not bother about it and rely on the internal adjustment. In addition, the matter itself is relatively straightforward, and if you understood the standard RADIANCE parameters and their meaning, there should be no problem with the direct cache ones at all. I tried to keep them as intuitive as possible (e.g. setting the resolution directly in m), although this has some disadvantages in other respects. Here, an apology has to be directed to all users of non-SI units. If you use feet or some other unit of length, you have to set the start resolution value accordingly with -Dr.

Of course, everything is still a work in progress, and only after gaining more experience with various rendering tasks will it be possible to judge whether the given parameter set really suits the needs. Very big scenes in particular will still be challenging for the direct cache due to their high demand on memory. This is the reason why rather coarse values are set by default for the start resolution for large scene bounding cubes. They correspond to a start refining depth of 9; with the additional refining that depends on illuminance variation, depths of 12 may be reached locally. Based on my experience so far, this means memory requirements of 200 MB and higher. If you have lots of RAM at your disposal, you are of course encouraged to try out higher resolution settings.

2.4. Direct caching and parallel rendering

Currently, sharing of the direct cache file between several processes (as in the ambient case) is not possible. Because the cache fills quickly, the situation is different anyway. The ratio of the time needed to distribute and integrate values from other processes to that needed locally for the calculation is certainly higher, and the benefit of sharing is thus lower than in the ambient case. So for the time being, the best way is to specify a local direct cache file for each separate host, if file writing is to take place. A common file should not be chosen, as this would mean that the same values are written to it several times, apart from the usual problems of corrupted data in case of uncontrolled simultaneous access to one file.

3. Some further implementation details

At first glance, the direct cache seems to be a straightforward counterpart to the already existing ambient cache. But there are two important differences: the number of values as well as the number of calls of the direct cache lookup function exceed the corresponding ambient counterparts by far. This automatically implies that quick delivery of a value is crucial, too. For these reasons, the - rather simple - scheme with blockwise preallocation was established. It minimizes the number of malloc() calls and avoids having the data float freely in memory. Interpolation was deliberately omitted. The figures in Sect. 2.2 show the schematics of the currently used procedure: an ambient sample ray hit triggers the memory allocation for a block of subcubes which are subsequently filled with data. The simplicity of the algorithm ensures quick execution, but unfortunately comes with one disadvantage, namely a larger memory overhead. In other words, the direct cache trades memory for speed, and can therefore be viewed as an adaptation to modern hardware standards. Today's PCs usually have a lot more RAM available than years ago when RADIANCE was developed.

Luckily, even in the case of blockwise preallocation one benefits from the fact that a lot of space within the scene bounding cube remains empty (in usual scenes), so a lot of blocks need not be allocated. This effect can even be seen in the two-dimensional illustration. But the underlying indexing of the subcubes, as well as the subcubes of a block which remain 'empty' (not filled with data), increase the necessary amount of storage. To counteract this, a 'storage reduction' factor is introduced, which limits preallocation to a fraction of the total number of subcubes a block would normally contain (think for example of a plane cutting through a block of N^3 cubes: only N^2 of them will actually be needed if no other objects are present). If more storage space is needed, it will be allocated later during the process.

Nevertheless, even with this reduced preallocation, the storage requirement will become quite large at high resolutions. (There is of course the 4 GB barrier for the integer values in 32-bit systems, but because of a two-stage way of indexing this can be circumvented, so the memory is the real limiting factor.) To some extent, however, overhead is a general problem of any octree implementation (consider the pointers at each branch in a classical method); it is a price to be paid for having quick access to the stored values. To use the direct cache, one does not need to bother about this, as the block size and the storage reduction are set automatically. In the new flexible version there is less freedom to override the default settings compared to the former fixed value approach. Some adaptation can be made with the help of the storage reduction factor mentioned above, which is adjustable through the -Dc setting (0 < Dc <= 1). Small values (but not smaller than 0.1, which hardly makes sense) will reduce preallocation to the minimum or below, resulting of course in increased reallocation during the run. (If you use the fixed resolution scheme, i.e. -Dv = 0, the block depth may also be manipulated with -Db (integer, 1 <= Db <= 5). In this case, the setting has of course no influence on the resolution, only on the way the maximum refining steps are divided between the blocks and subblocks.) The -Dc setting has some influence on the possible number of test values, so if you get a corresponding error message, -Dv has to be reduced. Bothering about this is not necessary at all for the usual application, as preallocation is already automatically reduced for high resolution settings. The parameters were important at the beginning of the development and are still available for the sake of - well, one never knows. For those interested, compiling the code with -DINFO will activate the output of some statistics about the number of values, allocated blocks and required memory.
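The effect of the storage reduction factor can be estimated with a few lines of Python. This is a sketch under assumptions, not a description of the actual allocation code: the function names are hypothetical, and it assumes that a block of depth d holds (2^d)^3 subcubes and that -Dc simply scales the number of preallocated entries.

```python
import math


def subcubes_per_block(block_depth):
    """Total number of subcubes in a block refined block_depth times."""
    n = 2 ** block_depth  # subcubes along one edge of the block
    return n ** 3


def plane_subcubes(block_depth):
    """Subcubes touched by one axis-aligned plane cutting through the block."""
    n = 2 ** block_depth
    return n ** 2


def preallocated_entries(block_depth, dc):
    """Entries reserved up front for a storage reduction factor dc."""
    if not 0.0 < dc <= 1.0:
        raise ValueError("-Dc must satisfy 0 < Dc <= 1")
    return max(1, math.ceil(dc * subcubes_per_block(block_depth)))
```

For a block depth of 3 a block contains 512 subcubes, of which a single plane needs only 64. A factor of 0.125 would thus preallocate exactly what the plane requires, and anything beyond that would be allocated later during the run.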

27.08.2002 CB

by AMcneil, last modified Feb 29, 2016 12:26 PM